bit of a preamble: this post is “too long for email.” yeah, it’s make-tea-first long. godspeed!

i've been working with ai systems every day for months now, teaching them to predict crystal structures and design new materials. the better they get, the more something gnaws at me. last week i watched our model solve in thirty seconds what took me three weeks to figure out during my first month working at the materials design group, and instead of feeling proud, i felt... empty?

there's this moment i keep thinking about. it's 2am (for some reason all my latest posts involve me working late at night, this post included… it's being drafted at 12:13am lol), i'm in the lab (technically just my bedroom but it feels like a lab at 2am), and i've been debugging the same piece of code for four hours. my eyes hurt. i've tried seventeen different approaches. i'm reading the same documentation for the fifth time when suddenly - oh. the problem isn't where i thought it was. it's three functions upstream, a tiny assumption i made without realising

that moment of revelation after hours of confusion? ai will never have that. it will never know the specific joy of being stupid for four hours and then suddenly not being stupid anymore

we're building systems that can skip straight to the answer, and everyone's excited about the efficiency. but i keep thinking about what we lose when we optimise away the struggle

remember learning to ride a bike? the specific texture of failing — wobbly starts, scraped knees, that moment when balance suddenly clicks? imagine if instead we could just download the ability. would we? probably. should we? i'm not sure

when i first started learning about crystal structures, i spent weeks visualising atoms in my head wrong. i thought they packed in ways that were geometrically impossible. my professor could have corrected me immediately, but he let me figure it out myself. when i finally understood why my mental model was broken, the correct version embedded itself so deeply i could feel it in my bones, and i haven’t forgotten it since

ai would never have had the wrong model to begin with. it would start with the right answer. efficient? yes. beautiful? i don't know

there's this phrase that haunts me: "solved problem." as in, "protein folding is basically a solved problem now." "image generation is a solved problem." soon it'll be "materials discovery is a solved problem"

but when we say "solved" we mean "we can get the right answer." we don't mean "we understand why." we don't mean "we've traced every path that leads there." we definitely don't mean "we've felt the shape of the problem in our hands"

ai can accelerate discovery, but intuition and experience remain essential for interpreting results.

a perspective on materials informatics: state-of-the-art and challenges. lookman, t. et al. (2016).

i think about the mathematicians who spent lifetimes on problems that computers now solve instantly. were those lifetimes wasted? or is there something in the attempt itself, in the friction of human mind against universe, that matters as much as the solution?

last month, i was training a new model to work with materials domain tools and produce reliable answers. despite weeks of effort, it kept failing in peculiar ways, predicting impossible densities, suggesting non-existent crystal structures, outputting complete nonsense

i spent days questioning everything: adjusting hyperparameters, redesigning the entire architecture, even doubting whether my workflow hypothesis could actually work. i rewrote the data pipeline three times. i tried different embedding strategies. i scrutinised every line of the model architecture

then i found it. a single line in my preprocessing script: i'd written value * 1e-10 instead of value * 1e-9 when converting nanometres to metres. every structural parameter in my training data was off by an order of magnitude

when i fixed that one character, the model immediately started working perfectly, predicting properties within experimental error, suggesting sensible compositions, understanding crystal symmetries
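for the curious, here's a minimal sketch of how that kind of bug can be caught early. this is not my actual preprocessing script: the names (`NM_TO_M`, `ANGSTROM_TO_M`, `nm_to_m`, `check_lattice_parameter_m`) and the sanity range are made up for illustration. the idea is just to name the conversion factor once instead of typing the exponent inline, and to check that the converted value lands in a physically plausible range

```python
import math

# hypothetical names for illustration, not the real pipeline
NM_TO_M = 1e-9         # 1 nanometre = 1e-9 metres
ANGSTROM_TO_M = 1e-10  # 1 angstrom = 1e-10 metres (the factor i typed by mistake)

# assumed sanity range for a lattice parameter, in metres
# (roughly 1 to 100 angstroms; adjust for your own data)
MIN_LATTICE_M = 1e-10
MAX_LATTICE_M = 1e-8


def nm_to_m(value_nm: float) -> float:
    """Convert a length from nanometres to metres."""
    return value_nm * NM_TO_M


def check_lattice_parameter_m(value_m: float) -> float:
    """Return value_m unchanged, or raise if it is outside the assumed range."""
    if not (MIN_LATTICE_M <= value_m <= MAX_LATTICE_M):
        raise ValueError(
            f"lattice parameter {value_m:.3e} m is outside "
            f"[{MIN_LATTICE_M:.0e}, {MAX_LATTICE_M:.0e}] m - unit mix-up?"
        )
    return value_m


if __name__ == "__main__":
    a_si_nm = 0.5431  # silicon lattice parameter, in nanometres

    # correct conversion passes the check
    a_ok = check_lattice_parameter_m(nm_to_m(a_si_nm))
    print(f"correct: {a_ok:.4e} m")

    # the buggy conversion is ten times too small and gets flagged
    try:
        check_lattice_parameter_m(a_si_nm * ANGSTROM_TO_M)
    except ValueError as err:
        print(f"caught: {err}")
```

a range check like this won't catch every case (a larger cell that's off by ten can still land inside the range), so it's a tripwire, not a guarantee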

my first thought was "i wasted three days on a missing zero"

my second thought was "no, i learnt what failure looks like in seven different ways. i know exactly which parameters matter and which don't. i understand my model's behaviour at a level i never would have reached if everything had worked first time"

the thing is - ai makes mistakes too. we call them hallucinations, as if the model is dreaming. but when ai fails, it doesn't learn the way i did. it doesn't build intuition about what wrong feels like. it just gets retrained until the errors stop. no memory of the struggle, no scar tissue from the failure, no hard-won instinct for when something's about to break (also, existentially but to its benefit: no dread about trying something wild, no hesitation born from past failures, no little voice saying "remember what happened last time?" just pure, naive willingness to attempt the impossible every single time) lol

here's what really scares me: we're so impressed by ai's capabilities that we're starting to lose sight of the human process (big generalisation here - i don’t think the human process is fundamentally all about struggle, but i do think struggle has played a part in our survival thus far). why spend months learning to code when ai can generate it? why struggle through papers when ai can summarise them? why think hard about problems when ai can think faster?

“struggle isn’t an option: it’s a biological requirement… deep practice is built on a paradox: struggling in certain targeted ways—operating at the edges of your ability, where you make mistakes—makes you smarter.”

the talent code (daniel coyle)

but thinking isn't just about getting to the answer. it's about developing the mental muscles that get you there. it's about building intuition through repetition. it's about earning your understanding

when you struggle with a concept for hours before grasping it, you're not just learning the concept. you're learning how to struggle. you're building patience, developing problem-solving strategies, discovering your own cognitive patterns. you're learning what frustration feels like and how to push through it

ai experiences none of this. it pattern-matches its way to solutions without ever having been confused. it generates without having struggled to create. it answers without having questioned

i'm not saying ai is bad. i use it every day. it helps me explore possibilities i could never check manually. it spots patterns i'd miss. it handles the mechanical parts so i can focus on the creative ones (and more recently philosophic ones too)

but i worry we're so dazzled by the efficiency that we forget why inefficiency mattered

science isn't just about having the right answers. it's about the culture of argument, the late-night debates, the weeks spent pursuing dead ends. it's about learning to trust your intuition and learning when not to. it's about developing taste through trial and error

(probably the best series of sentences i've ever strung together, or maybe that was the fumbling one from the other post)

when we were kids, we'd take apart broken radios just to see how they worked. we'd spend entire afternoons trying to fix them, usually failing. nobody does that anymore - why bother when you can just look up how radios work? but those afternoons taught us something that no explanation could: the physical reality of how things fit together, the frustration of tiny screws, the satisfaction of understanding through direct experience

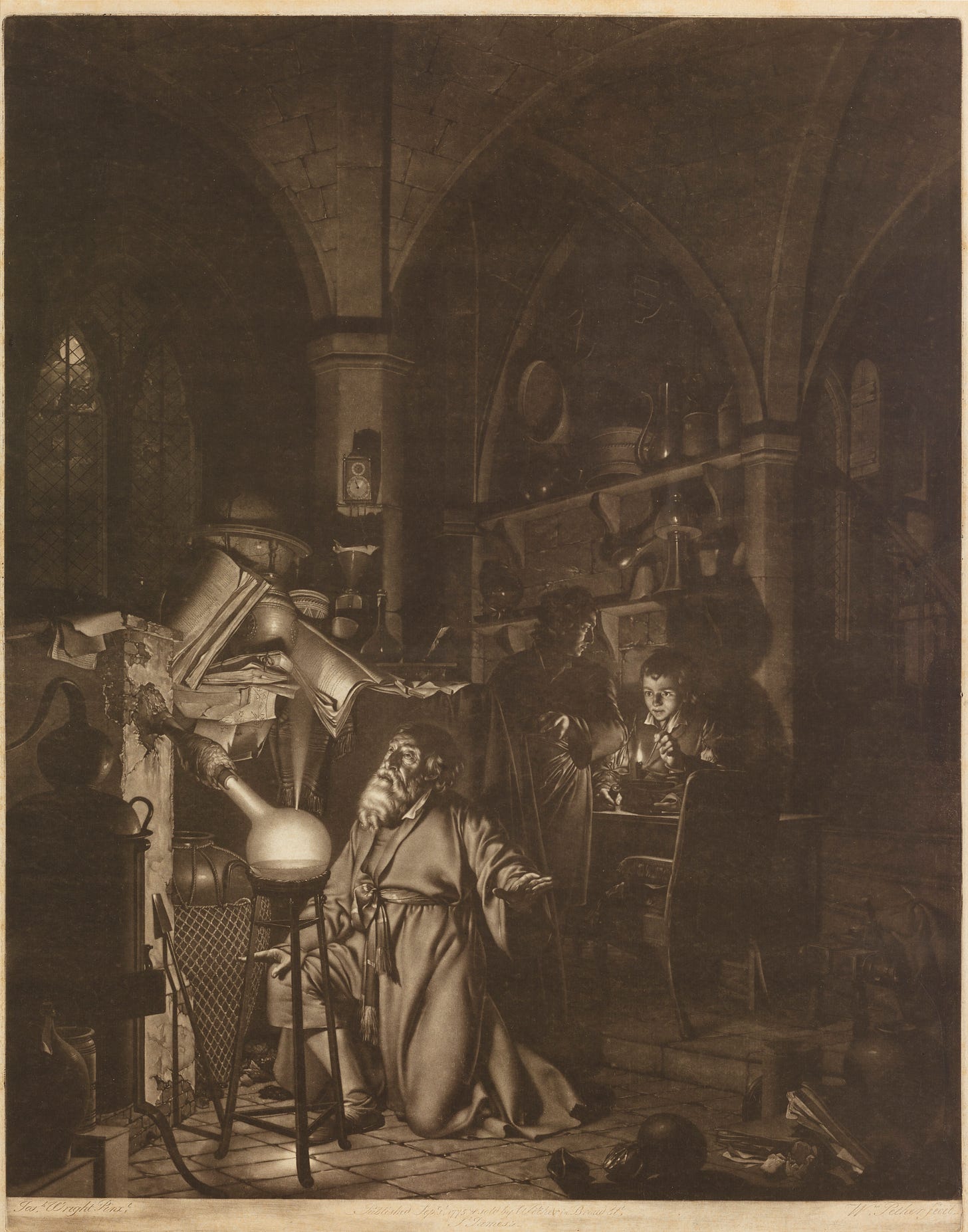

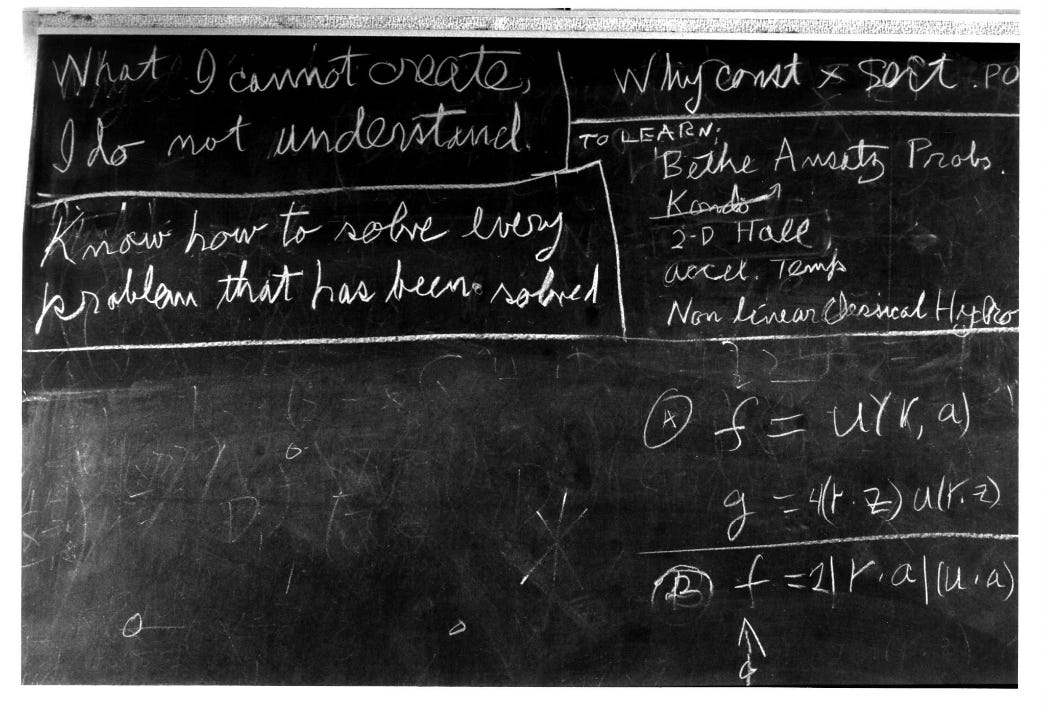

there's this story about richard feynman refusing to read other physicists' papers before trying to derive their results himself. wildly inefficient. potentially redundant. but he said it was the only way to truly understand - to think the thoughts himself, to hit the same walls, to find his own path through

imagine telling that story to someone training an ai model. they'd laugh. why recreate what already exists? why waste time on solved problems? (currently my argument in support of companies that are gpt wrappers, btw, though i do recognise the value of building new models for specific use cases, and you also miss out on some things… that's a post for another day)

but feynman wasn't trying to be efficient. he was trying to be a physicist. and being a physicist meant thinking physics thoughts, not just knowing physics answers

the other day i was helping a student debug their code. i could see the problem immediately - wrong variable scope. but instead of telling them, i asked questions. watched them trace through their logic. saw the moment when understanding dawned

"why didn't you just tell me?" they asked after

because the struggle was the lesson. the answer was just a byproduct

this is what i mean by the beauty of raw human process. it's messy and slow and full of unnecessary loops. but those loops are where learning lives, even though it's a little painful. that messiness is where intuition develops. that slowness is where deep understanding crystallises

i keep coming back to pottery. there's a famous experiment where a ceramics class was divided in two. half were graded on quantity - make as many pots as possible. half were graded on quality - make one perfect pot

the quantity group made better pots. they learned through repeated iteration, through failure, through the physical experience of clay in their hands. the quality group sat around theorising about the perfect pot

ai is all quality group. it can generate the perfect pot on the first try. but it will (for now at least) not know what clay feels like between its fingers. it will never understand the specific disappointment of a pot collapsing on the wheel. it will never feel the particular joy of finally centering clay after a hundred attempts

we're building systems that give us answers without questions, solutions without struggle, creation without craft. and yes, it's powerful. yes, it's useful. yes, it will solve problems we never could alone

but let's not pretend it's the same thing as human understanding. let's not optimise away the fumbling, the confusion, the tedious practice. let's not forget that the inefficiency was never a bug, it was the feature

because the beauty of being human isn't in being right. it's in the specific texture of being wrong, over and over, until suddenly you're not. it's in the accumulated scar tissue of every failed attempt. it's in knowing not just what works, but feeling in your bones all the ways it doesn't

ai will never have that. and maybe that's okay. maybe ai can be our calculator while we remain the mathematicians. maybe it can handle the solved problems while we keep pushing into the unsolved ones

but only if we remember that the struggle isn't something to optimise away. it's the whole point

only if we keep choosing the long way sometimes, just because it's ours

only if we remember that efficiency isn't everything. that sometimes the most important things happen in the space between question and answer, in the friction of figuring it out, in the raw, messy, beautiful process of being a human trying to understand the universe

even when - especially when - there's an easier way

where my argument falls apart

after writing the initial draft of this and sharing it with a friend (i’m quite poetic, i know), they pushed back hard, and they might be right…

"you're romanticising struggle," they said. "when you spent three days on that typo, what if you'd spent those three days exploring seven different successful approaches instead?"

fuck. they had a point

i've been treating all inefficiency as sacred, but maybe that's just nostalgia dressed up as wisdom. when i talk about the beauty of debugging for hours, am i actually defending something valuable, or am i just attached to my own suffering? we're pattern-matching machines who release dopamine when we solve puzzles - that doesn't necessarily make puzzle-solving the deepest path to truth

here's what really made me pause: every hour i spend on the basics is an hour not spent at the frontier. if ai can compress years of learning into days, maybe that gives us more time to struggle with actually unsolved problems. maybe i'm defending the wrong inefficiencies (though note i do not mean ignore a fundamental understanding of the basics to reach for the advanced. i'm certain that way you’ll almost always be wasting your time)

think about feynman again. yes, he derived results himself, but he didn't insist on rediscovering arithmetic. he didn't rebuild number theory from scratch. he chose his inefficiencies strategically, at the edge of known physics. maybe that's what i missed: it's not about preserving all struggle, it's about choosing the right struggles

my friend asked: "does a surgeon who learnt anatomy through ai-assisted visualisation understand the human body less deeply than one who spent years with cadavers?"

i wanted to say yes, but honestly? i don't know. the understanding might be different (and if it's different, how different?), but why am i so sure it's inferior?

maybe what i'm really afraid of isn't that ai will eliminate struggle, but that we'll forget to seek it out. that we'll use these tools to avoid all friction instead of using them to attempt harder things. that we'll optimise for ease instead of growth

the pottery example i loved so much? the quantity group didn't win because struggle is beautiful. they won because they had faster feedback loops. what if they'd had ai showing them why each pot failed? they might have made even better pots in half the time

perhaps the real risk isn't efficiency itself, but becoming addicted to ease. if we use ai to skip learning programming entirely, we're impoverished. but if we use it to quickly grasp syntax so we can struggle with designing new algorithms? maybe we go further than we could have imagined

thinking about antibiotics. we developed them so we wouldn't have to "struggle beautifully" with tuberculosis. we invented writing so we wouldn't have to "earn" every piece of knowledge through direct experience. maybe ai is just the next step, not eliminating struggle, but relocating it

the beauty of raw human process isn't going anywhere. it's just moving upstream, to places we couldn't reach before. we'll still fumble and fail and spend hours being confused - just about harder problems. we'll still have 2am revelations - just about questions we couldn't even formulate before

so maybe my whole framing is wrong. maybe it's not about old way versus new way.

maybe it's about making sure that as our tools get more powerful, our ambitions scale accordingly. that we use ai not to do less, but to attempt more

the danger isn't that we'll stop struggling. it's that we'll struggle with the wrong things. that we'll preserve inefficiencies out of nostalgia instead of choosing new ones out of ambition. that we'll defend the beauty of being bad at things instead of finding beautiful new things to be bad at

i see now that my argument assumes struggle has inherent value. but maybe value comes from what we're struggling toward, not the struggle itself. maybe i've been so focused on preserving the soul of human experience that i forgot the point is to expand it

still. something in me resists this logic. something still believes that those hours of confusion, that specific texture of not-knowing-then-knowing, that intimacy with failure - that it matters in ways we don't fully understand yet. even if i can't defend it perfectly

one of the beautiful things about science is that it allows us to experience the pleasure of being stupid again and again. … the crucial lesson was that the scope of things I didn’t know wasn’t merely vast; it was, for all practical purposes, infinite

the importance of stupidity in scientific research (martin a. schwartz)

maybe that's okay. maybe it's good to have people like me clinging to inefficiency (at least partially - i'm still very much pro-ai, i think i just needed to stop and reflect a bit about where all this is headed, especially now as i build ai things of my own) while others charge toward optimisation. maybe we need both - the nostalgics and the accelerationists, the pottery-makers and the algorithm-designers, the people who insist on doing things by hand and the people who ask why the hell would you do things by hand

but i'm less sure than i was when i started writing. which, ironically, might be the most human thing about this whole essay

footnotes

on the process of discovery in mathematics - William Thurston's beautiful essay on mathematical understanding

cognitive load theory - why struggle might actually impede learning

the case for effortless mastery - counterarguments to valorising struggle

the importance of stupidity in scientific research (schwartz): a must-read if you find yourself wrestling with the discomfort of not knowing

the glass cage (carr): explores what’s lost when automation replaces human effort and has this awesome quote - “when we become dependent on our tools, we lose the ability to innovate… the more we automate, the more we distance ourselves from the work that gives us purpose” (purpose - that's another thing i should probably write about). in addition, go read more of nicholas carr’s books, he’s a legend

the alignment problem (christian): alignment is quite fascinating and there's lots of cool sources out there (my faves are from anthropic), but this book is a good intro and looks at what it means for ai to “understand,” and what’s uniquely human about our own learning

why greatness cannot be planned (stanley & lehman): this one had a resurgence on twitter (x) last autumn/winter. it argues for the necessity of open-ended exploration and the limits of goal-driven efficiency

the case against efficiency (the new atlantis, ezra klein show): both the article and podcast offer philosophical and practical critiques of efficiency as a societal value - “efficiency is not always a virtue; sometimes it is the enemy of meaning”

Cognitive Hygiene: why you need to make thinking hard again

how to solve it (pólya): a classic on the art of problem-solving and the necessity of productive struggle.

the talent code (coyle): on how deep practice and “desirable difficulty” build expertise.